Lysario – by Panagiotis Chatzichrisafis

"For we believe that we know a composite thing when we know of what and of how many parts it consists …"

Steven M. Kay: “Modern Spectral Estimation – Theory and Applications”, p. 35 exercise 2.18

Author: Panagiotis, 22 Aug

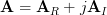

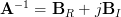

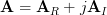

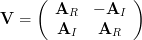

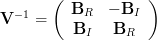

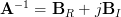

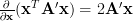

In [1, p. 35 exercise 2.18] we are asked to prove that the inverse of a complex matrix  may be found by first inverting

may be found by first inverting

to yield

and then letting .

read the conclusion >

.

read the conclusion >

may be found by first inverting

may be found by first inverting

| (1) | ||

to yield

| (2) | ||

and then letting

.

read the conclusion >

.

read the conclusion >
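The claim of exercise 2.18 can be checked numerically. The following is a minimal sketch, not the proof asked for in the exercise. It assumes the real block matrix has the form $[\mathbf{A}_R, -\mathbf{A}_I; \mathbf{A}_I, \mathbf{A}_R]$ and that $\mathbf{B}_R$ and $\mathbf{B}_I$ are read from the upper-left and lower-left blocks of its inverse. The helper names `invert_real`, `invert_complex_via_real` and `matmul`, as well as the test matrix `A`, are made up for this illustration.

```python
def invert_real(m):
    """Invert a square real matrix (list of lists) by Gauss-Jordan
    elimination with partial pivoting."""
    n = len(m)
    # Augment m with the identity matrix: [m | I].
    aug = [list(map(float, row)) + [float(i == j) for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        # Partial pivoting: choose the row with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    # The right half of the augmented matrix now holds the inverse.
    return [row[n:] for row in aug]


def invert_complex_via_real(a):
    """Invert a complex n x n matrix using only a real 2n x 2n inversion."""
    n = len(a)
    a_r = [[z.real for z in row] for row in a]
    a_i = [[z.imag for z in row] for row in a]
    # Build the real block matrix [[A_R, -A_I], [A_I, A_R]].
    top = [a_r[i] + [-v for v in a_i[i]] for i in range(n)]
    bottom = [a_i[i] + a_r[i] for i in range(n)]
    inv = invert_real(top + bottom)
    # B_R is the upper-left block, B_I the lower-left block of the inverse.
    return [[complex(inv[i][j], inv[n + i][j]) for j in range(n)]
            for i in range(n)]


def matmul(x, y):
    """Plain matrix product of two lists of lists."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y)))
             for j in range(len(y[0]))] for i in range(len(x))]


# Hypothetical 2 x 2 example matrix (determinant 5 + 1j, so it is invertible).
A = [[2 + 1j, 1 - 1j],
     [0 + 3j, 4 + 0j]]
A_inv = invert_complex_via_real(A)
product = matmul(A, A_inv)  # should be close to the 2 x 2 identity
```

The sketch works because the map from $\mathbf{A}$ to the real block matrix preserves matrix products, so inverting the block matrix is equivalent to inverting $\mathbf{A}$.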

Steven M. Kay: “Modern Spectral Estimation – Theory and Applications”, p. 35 exercise 2.17

Author: Panagiotis, 16 Aug

In [1, p. 35 exercise 2.17] we are asked to verify the alternative expression [1, p. 33 (2.77)] for a hermitian function.


Steven M. Kay: “Modern Spectral Estimation – Theory and Applications”, p. 35 exercise 2.16

Author: Panagiotis, 12 Aug

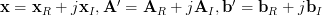

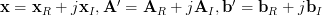

In [1, p. 35 exercise 2.16] we are asked to verify the formulas given for the complex gradient of a hermitian and a linear form [1, p. 31 (2.70)]. To do so, we are instructed to decompose the matrices and vectors into their real and imaginary parts as

read the conclusion >

read the conclusion >

read the conclusion >

read the conclusion >

Steven M. Kay: “Modern Spectral Estimation – Theory and Applications”, p. 35 exercise 2.15

Author: Panagiotis, 25 Jun

In [1, p. 35 exercise 2.15] we are asked to verify the formulas given for the gradient of a quadratic and linear form [1, p. 31 (2.61)].

The corresponding formulas are

$$\frac{\partial\, \mathbf{x}^T \mathbf{A} \mathbf{x}}{\partial \mathbf{x}} = 2\mathbf{A}\mathbf{x} \tag{1}$$

and

$$\frac{\partial\, \mathbf{b}^T \mathbf{x}}{\partial \mathbf{x}} = \mathbf{b} \tag{2}$$

where $\mathbf{A}$ is a symmetric $n \times n$ matrix with elements $a_{ij}$, $\mathbf{b}$ is a real $n \times 1$ vector with elements $b_i$, and $\partial/\partial\mathbf{x}$ denotes the gradient of a real function with respect to $\mathbf{x}$.
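A quick numerical sanity check of the two gradient formulas can be sketched as follows. It assumes the formulas in question are the standard ones, namely that the gradient of $\mathbf{x}^T\mathbf{A}\mathbf{x}$ is $2\mathbf{A}\mathbf{x}$ for symmetric $\mathbf{A}$ and that the gradient of $\mathbf{b}^T\mathbf{x}$ is $\mathbf{b}$. It compares them with central finite differences. The function names and the values of `A`, `b` and `x` are made up for this illustration, and the check is no substitute for the derivation requested in the exercise.

```python
def quadratic_form(a, x):
    """Evaluate x^T A x for a matrix a (list of lists) and vector x."""
    n = len(x)
    return sum(x[i] * a[i][j] * x[j] for i in range(n) for j in range(n))


def linear_form(b, x):
    """Evaluate b^T x."""
    return sum(bi * xi for bi, xi in zip(b, x))


def numerical_gradient(f, x, h=1e-6):
    """Approximate the gradient of f at x with central differences."""
    grad = []
    for k in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[k] += h
        x_minus[k] -= h
        grad.append((f(x_plus) - f(x_minus)) / (2 * h))
    return grad


# Hypothetical test data: A must be symmetric for the 2Ax formula to hold.
A = [[2.0, -1.0, 0.5],
     [-1.0, 3.0, 1.0],
     [0.5, 1.0, 4.0]]
b = [1.0, -2.0, 0.5]
x = [0.3, -1.2, 2.0]

grad_quadratic = numerical_gradient(lambda v: quadratic_form(A, v), x)
grad_linear = numerical_gradient(lambda v: linear_form(b, v), x)

# Closed-form gradient of the quadratic form: 2 A x.
expected_quadratic = [2 * sum(A[i][j] * x[j] for j in range(3)) for i in range(3)]
```

If `A` were not symmetric, the finite-difference gradient would instead agree with $(\mathbf{A} + \mathbf{A}^T)\mathbf{x}$, which is why the symmetry assumption matters.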

Steven M. Kay: “Modern Spectral Estimation – Theory and Applications”, p. 35 exercise 2.14

Author: Panagiotis, 28 May

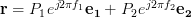

In [1, p. 35 exercise 2.14] we are asked to consider the solution to the set of linear equations

where is a

is a  hermitian matrix given by:

hermitian matrix given by:

and is a complex

is a complex  vector given by

vector given by

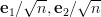

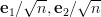

The complex vectors are defined in [1, p.22, (2.27)]. Furthermore we are asked to assumed that ,

,  for

for  are distinct integers in the range

are distinct integers in the range ![[-n/2,n/2-1]](https://lysario.de/wp-content/cache/tex_24916734a0a5230271c84429761581eb.png) for

for  even and

even and ![[-(n-1)/2,(n-1)/2]](https://lysario.de/wp-content/cache/tex_b9ee4eee41d27a8e5eea256f738e00e2.png) for

for  odd.

odd.  is defined to be a

is defined to be a  vector.

It is requested to show that

vector.

It is requested to show that  is a singular matrix (assuming

is a singular matrix (assuming  ) and that there are infinite number of solutions.

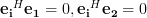

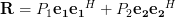

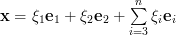

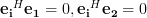

A further task is to find the general solution and also the minimum norm solution of the set of linear equations. The hint provided by the exercise is to note that

) and that there are infinite number of solutions.

A further task is to find the general solution and also the minimum norm solution of the set of linear equations. The hint provided by the exercise is to note that  are eigenvectors of

are eigenvectors of  with nonzero eigenvalues and then to assume a solution of the form

with nonzero eigenvalues and then to assume a solution of the form

where for

for  and solve for

and solve for  .

read the conclusion >

.

read the conclusion >

| (1) | ||

where

is a

is a  hermitian matrix given by:

hermitian matrix given by:

| (2) | ||

and

is a complex

is a complex  vector given by

vector given by

| (3) | ||

The complex vectors are defined in [1, p.22, (2.27)]. Furthermore we are asked to assumed that

,

,  for

for  are distinct integers in the range

are distinct integers in the range ![[-n/2,n/2-1]](https://lysario.de/wp-content/cache/tex_24916734a0a5230271c84429761581eb.png) for

for  even and

even and ![[-(n-1)/2,(n-1)/2]](https://lysario.de/wp-content/cache/tex_b9ee4eee41d27a8e5eea256f738e00e2.png) for

for  odd.

odd.  is defined to be a

is defined to be a  vector.

It is requested to show that

vector.

It is requested to show that  is a singular matrix (assuming

is a singular matrix (assuming  ) and that there are infinite number of solutions.

A further task is to find the general solution and also the minimum norm solution of the set of linear equations. The hint provided by the exercise is to note that

) and that there are infinite number of solutions.

A further task is to find the general solution and also the minimum norm solution of the set of linear equations. The hint provided by the exercise is to note that  are eigenvectors of

are eigenvectors of  with nonzero eigenvalues and then to assume a solution of the form

with nonzero eigenvalues and then to assume a solution of the form

| (4) | ||

where

for

for  and solve for

and solve for  .

read the conclusion >

.

read the conclusion >
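The structure of exercise 2.14 can be explored numerically with the sketch below. It rests on several assumptions that should be checked against [1]: that the system is $\mathbf{R}\mathbf{x} = -\mathbf{r}$ with $\mathbf{R} = P_1\mathbf{e}_1\mathbf{e}_1^H + P_2\mathbf{e}_2\mathbf{e}_2^H$ and $\mathbf{r} = P_1 e^{j2\pi f_1}\mathbf{e}_1 + P_2 e^{j2\pi f_2}\mathbf{e}_2$, and that $\mathbf{e}_i = [1, e^{j2\pi f_i}, \ldots, e^{j2\pi f_i (n-1)}]^T$. Under these assumptions, $\mathbf{e}_i^H\mathbf{e}_i = n$ and the vectors at distinct frequencies $k/n$ are mutually orthogonal. This suggests the candidate coefficients $\xi_i = -e^{j2\pi f_i}/n$ for $i = 1, 2$. All function names and the values of `n`, `k`, `l`, `m`, `p1`, `p2` are made up for this illustration.

```python
import cmath


def sinusoid_vector(f, n):
    """Assumed form of e_i: [1, exp(j2*pi*f), ..., exp(j2*pi*f*(n-1))]."""
    return [cmath.exp(2j * cmath.pi * f * t) for t in range(n)]


def inner(u, v):
    """Hermitian inner product u^H v."""
    return sum(ui.conjugate() * vi for ui, vi in zip(u, v))


def apply_R(x, e1, e2, p1, p2):
    """Compute R x for R = P1 e1 e1^H + P2 e2 e2^H without forming R."""
    c1 = p1 * inner(e1, x)
    c2 = p2 * inner(e2, x)
    return [c1 * a + c2 * b for a, b in zip(e1, e2)]


# Hypothetical parameters: n even, so k, l, m must lie in [-n/2, n/2 - 1].
n, k, l, m = 8, 1, -2, 3
p1, p2 = 2.0, 0.5
f1, f2 = k / n, l / n
e1, e2, e3 = (sinusoid_vector(f, n) for f in (f1, f2, m / n))

# Right-hand side vector r as assumed in the lead-in.
r = [p1 * cmath.exp(2j * cmath.pi * f1) * a + p2 * cmath.exp(2j * cmath.pi * f2) * b
     for a, b in zip(e1, e2)]

# Candidate minimum norm solution: only components along e1 and e2.
xi1 = -cmath.exp(2j * cmath.pi * f1) / n
xi2 = -cmath.exp(2j * cmath.pi * f2) / n
x_min = [xi1 * a + xi2 * b for a, b in zip(e1, e2)]

# Adding any multiple of e3 (orthogonal to e1 and e2) gives another solution.
x_other = [v + 0.7 * c for v, c in zip(x_min, e3)]

# Residuals of R x + r; both should vanish up to rounding.
residual_min = max(abs(y + ri) for y, ri in zip(apply_R(x_min, e1, e2, p1, p2), r))
residual_other = max(abs(y + ri) for y, ri in zip(apply_R(x_other, e1, e2, p1, p2), r))

# R e3 should be the zero vector, which shows that R is singular.
null_residual = max(abs(y) for y in apply_R(e3, e1, e2, p1, p2))

norm_min = abs(inner(x_min, x_min)) ** 0.5
norm_other = abs(inner(x_other, x_other)) ** 0.5
```

In this sketch the component along `e3` leaves the residual unchanged but increases the norm, which illustrates both the infinite number of solutions and why the minimum norm solution carries no components outside the span of $\mathbf{e}_1$ and $\mathbf{e}_2$.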