Lysario – by Panagiotis Chatzichrisafis

"ούτω γάρ ειδέναι το σύνθετον υπολαμβάνομεν, όταν ειδώμεν εκ τίνων και πόσων εστίν …" ("For we believe that we know a composite thing when we know from what and from how many parts it is made …")

Steven M. Kay: “Modern Spectral Estimation – Theory and Applications”, p. 60, exercise 3.1

Author: Panagiotis, 10 Oct

In [1, p. 60 exercise 3.1] a  real random vector

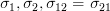

real random vector  is given , which is distributed according to a multivatiate Gaussian PDF with zero mean and covariance matrix:

is given , which is distributed according to a multivatiate Gaussian PDF with zero mean and covariance matrix:

We are asked to find if

if  where

where  is given by the relation:

is given by the relation:

so that and

and  are uncorrelated and hence independent. We are also asked to find the Cholesky decomposition of

are uncorrelated and hence independent. We are also asked to find the Cholesky decomposition of

which expresses

which expresses  as

as  , where

, where  is lower triangular with 1′s on the principal diagonal and

is lower triangular with 1′s on the principal diagonal and  is a diagonal matrix with positive diagonal elements.

is a diagonal matrix with positive diagonal elements.

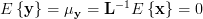

Solution: First we note that

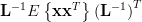

From the previous relation we find that![\mathbf{L}^{-1} = \left[ \begin{array}{cc} 1 & 0 \\ \alpha & 1 \end{array} \right]](https://lysario.de/wp-content/cache/tex_ba8da6f3a962f34c66efc4dfe1f0c44f.png) . Furthermore we note that

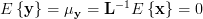

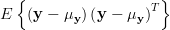

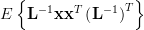

. Furthermore we note that  , because the mean of

, because the mean of  equals zero:

equals zero:  . The correlation matrix of

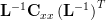

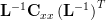

. The correlation matrix of  can be determined by:

can be determined by:

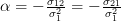

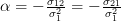

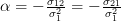

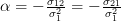
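As a quick sanity check of the matrix algebra above, the following sketch evaluates $\mathbf{L}^{-1}\mathbf{C}_{xx}(\mathbf{L}^{-1})^{T}$ numerically and compares it entry by entry with the closed-form last matrix. It uses pure Python. The helper names and the parameter values are hypothetical placeholders chosen for illustration; they do not come from the exercise.

```python
# Numerical sanity check of C_yy = L^{-1} C_xx (L^{-1})^T for the 2x2 case.
# Helper names are hypothetical; parameter values are arbitrary placeholders.

def matmul2(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def transpose2(a):
    """Transpose of a 2x2 matrix stored as nested lists."""
    return [[a[j][i] for j in range(2)] for i in range(2)]


def cyy_numeric(alpha, s1sq, s12, s21, s2sq):
    """Evaluate L^{-1} C_xx (L^{-1})^T by explicit matrix multiplication."""
    l_inv = [[1.0, 0.0], [alpha, 1.0]]
    c_xx = [[s1sq, s12], [s21, s2sq]]
    return matmul2(matmul2(l_inv, c_xx), transpose2(l_inv))


def cyy_closed_form(alpha, s1sq, s12, s21, s2sq):
    """Last matrix of the derivation in the text."""
    return [[s1sq, alpha * s1sq + s12],
            [alpha * s1sq + s21,
             alpha**2 * s1sq + alpha * (s12 + s21) + s2sq]]


params = (0.7, 4.0, 2.0, 2.0, 3.0)  # alpha, sigma_1^2, sigma_12, sigma_21, sigma_2^2
num = cyy_numeric(*params)
ref = cyy_closed_form(*params)
max_err = max(abs(num[i][j] - ref[i][j]) for i in range(2) for j in range(2))
print(max_err)  # expected to be at floating-point round-off level
```

The closed form does not use $\sigma_{12} = \sigma_{21}$, so the same comparison also holds for an unsymmetric test matrix.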

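We can now determine $\alpha$ so that $y_{1}$ and $y_{2}$ are uncorrelated. The two variables are uncorrelated when the off-diagonal elements of $\mathbf{C}_{yy}$ are zero, i.e. when $\alpha \sigma_{1}^{2} + \sigma_{12} = 0$ and $\alpha \sigma_{1}^{2} + \sigma_{21} = 0$. Thus for $\alpha = -\frac{\sigma_{12}}{\sigma_{1}^{2}} = -\frac{\sigma_{21}}{\sigma_{1}^{2}}$ (with $\sigma_{1}^{2} > 0$) the variables $y_{1}, y_{2}$ are uncorrelated, which is only possible when $\sigma_{12} = \sigma_{21}$. Because the random variables $x_{1}, x_{2}$ are real, $\sigma_{12} = E\{x_{1}x_{2}\} = E\{x_{2}x_{1}\} = \sigma_{21}$, so this condition is always fulfilled.

For $\alpha = -\frac{\sigma_{21}}{\sigma_{1}^{2}}$ the matrix $\mathbf{C}_{yy}$ can be rewritten as:

$$\begin{array}{rcl}
\mathbf{C}_{yy} & = & \left[\begin{array}{cc} \sigma_{1}^2 & 0 \\ 0 & \frac{\sigma_{21}^2}{\sigma_{1}^{4}} \sigma_{1}^{2} - \frac{ \sigma_{21}}{ \sigma_{1}^2} ( 2 \sigma_{21}) + \sigma_{2}^2 \end{array}\right] \\
& = & \left[\begin{array}{cc} \sigma_{1}^2 & 0 \\ 0 & \sigma_{2}^2 + \frac{\sigma_{21}^2}{\sigma_{1}^{2}} - 2 \frac{ \sigma_{21}^{2}}{ \sigma_{1}^2} \end{array}\right] \\
& = & \left[\begin{array}{cc} \sigma_{1}^2 & 0 \\ 0 & \sigma_{2}^2 - \frac{ \sigma_{21}^{2}}{ \sigma_{1}^2} \end{array}\right]
\end{array}$$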
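A small worked example makes the result concrete. The numbers are assumed for illustration only and are not part of the exercise. Take $\sigma_{1}^{2} = 4$, $\sigma_{12} = \sigma_{21} = 2$, $\sigma_{2}^{2} = 3$, which gives a positive definite $\mathbf{C}_{xx}$ since $4 \cdot 3 - 2^{2} = 8 > 0$. Then:

```latex
\alpha = -\frac{\sigma_{21}}{\sigma_{1}^{2}} = -\frac{2}{4} = -\frac{1}{2},
\qquad
\mathbf{C}_{yy} =
\left[\begin{array}{cc}
4 & -\frac{1}{2}\cdot 4 + 2 \\
-\frac{1}{2}\cdot 4 + 2 & \frac{1}{4}\cdot 4 - \frac{1}{2}\cdot 4 + 3
\end{array}\right]
=
\left[\begin{array}{cc} 4 & 0 \\ 0 & 2 \end{array}\right],
\qquad
\sigma_{2}^{2} - \frac{\sigma_{21}^{2}}{\sigma_{1}^{2}} = 3 - \frac{4}{4} = 2 .
```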

We know that jointly Gaussian random variables are independent if they are uncorrelated [2, pp. 154-155]. So far we have only found a condition on $\alpha$ that renders $y_{1}, y_{2}$ uncorrelated. From [1, p. 42] we know that the random variables $y_{1}, y_{2}$ are also jointly Gaussian, because they are obtained by a linear transform of the Gaussian random variables $x_{1}, x_{2}$. Thus $\alpha = -\frac{\sigma_{21}}{\sigma_{1}^{2}}$ is the condition for $y_{1}, y_{2}$ to be uncorrelated and therefore independent.
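A Monte Carlo sketch can illustrate the result. It is not part of the original solution, and the setup is assumed. A zero-mean Gaussian vector is built as $x_{1} = w_{1} + w_{2}$, $x_{2} = w_{1}$ from two independent standard normal variables. This gives $\mathbf{C}_{xx} = \left[\begin{array}{cc} 2 & 1 \\ 1 & 1 \end{array}\right]$, so $\alpha = -\frac{1}{2}$. After applying $\mathbf{y} = \mathbf{L}^{-1}\mathbf{x}$, the sample covariance of $y_{1}, y_{2}$ should be close to zero, and the sample variance of $y_{2}$ should be close to $\sigma_{2}^{2} - \frac{\sigma_{21}^{2}}{\sigma_{1}^{2}} = \frac{1}{2}$.

```python
import random


def sample_cov(a, b):
    """Unbiased sample covariance of two equally long lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    return sum((u - ma) * (v - mb) for u, v in zip(a, b)) / (n - 1)


rng = random.Random(12345)  # fixed seed for reproducibility
N = 100_000

# Assumed construction: x1 = w1 + w2, x2 = w1 with w1, w2 iid N(0, 1),
# hence C_xx = [[2, 1], [1, 1]] (illustrative values only).
x1, x2 = [], []
for _ in range(N):
    w1, w2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    x1.append(w1 + w2)
    x2.append(w1)

s1sq, s21 = 2.0, 1.0
alpha = -s21 / s1sq  # decorrelating alpha from the text

# y = L^{-1} x  ->  y1 = x1, y2 = alpha * x1 + x2
y1 = x1
y2 = [alpha * u + v for u, v in zip(x1, x2)]

cov_x = sample_cov(x1, x2)
cov_y = sample_cov(y1, y2)
var_y2 = sample_cov(y2, y2)
print(f"cov(x1,x2) ~ {cov_x:.3f}, cov(y1,y2) ~ {cov_y:.3f}, var(y2) ~ {var_y2:.3f}")
```

A near-zero sample covariance only demonstrates uncorrelatedness. Independence follows from the joint Gaussianity argument above, not from the simulation.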

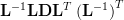

Now let us proceed to find the Cholesky decomposition of

to be uncorrelated and thus independent.

Now let us proceed to find the Cholesky decomposition of  . We first assume that

. We first assume that  are such that

are such that  is positive definite, a condition which is necessary to decompose the correlation matrix

is positive definite, a condition which is necessary to decompose the correlation matrix  by Cholesky’s decomposition:

by Cholesky’s decomposition:

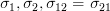

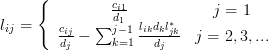

where the elements of the matrix , assuming

, assuming  are the elements of the matrix

are the elements of the matrix  , are given by [1, p.30, (2.55)] (see also [3, relations (10) and (11) ]):

, are given by [1, p.30, (2.55)] (see also [3, relations (10) and (11) ]):

and the elements of the matrix are given by the relation:

are given by the relation:

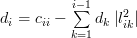
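The two recursions translate directly into a short routine. The function below is a plain-Python $\mathbf{L}\mathbf{D}\mathbf{L}^{T}$ factorization of an $n \times n$ symmetric positive definite matrix. The name `ldl_decomposition` is hypothetical. Computing column by column is one common ordering, not necessarily the presentation used in [1].

```python
def ldl_decomposition(c):
    """LDL^T factorization of a symmetric positive definite matrix.

    c is an n x n nested list. Returns (l, d): l is unit lower triangular
    (nested list) and d holds the diagonal elements of D. Implements
        d_j  = c_jj - sum_{k<j} l_jk^2 d_k
        l_ij = (c_ij - sum_{k<j} l_ik d_k l_jk) / d_j   for i > j
    """
    n = len(c)
    l = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    d = [0.0] * n
    for j in range(n):
        # Diagonal element uses only entries of L from earlier columns.
        d[j] = c[j][j] - sum(l[j][k] ** 2 * d[k] for k in range(j))
        if d[j] <= 0.0:
            raise ValueError("matrix is not positive definite")
        # Entries below the diagonal in column j.
        for i in range(j + 1, n):
            l[i][j] = (c[i][j] - sum(l[i][k] * d[k] * l[j][k] for k in range(j))) / d[j]
    return l, d


# Usage with assumed values sigma_1^2 = 4, sigma_21 = 2, sigma_2^2 = 3:
l, d = ldl_decomposition([[4.0, 2.0], [2.0, 3.0]])
print(l, d)  # [[1.0, 0.0], [0.5, 1.0]] [4.0, 2.0]
```

A non-positive pivot $d_{j}$ is reported as an error, which matches the positive-definiteness assumption stated above.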

The corresponding elements of the matrices and

and  are thus given by:

are thus given by:

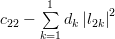
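For the $2 \times 2$ case, the positive-definiteness assumption is exactly what guarantees positive diagonal elements of $\mathbf{D}$. Since $\mathbf{L}$ has a unit diagonal, $\det \mathbf{L} = 1$. Sylvester's criterion (positive leading principal minors of a symmetric matrix, here with $\sigma_{12} = \sigma_{21}$) then gives:

```latex
\det \mathbf{C}_{xx} = \det\mathbf{L}\,\det\mathbf{D}\,\det\mathbf{L}^{T} = d_{1} d_{2}
= \sigma_{1}^{2}\left(\sigma_{2}^{2} - \frac{\sigma_{21}^{2}}{\sigma_{1}^{2}}\right)
= \sigma_{1}^{2}\sigma_{2}^{2} - \sigma_{21}^{2},
\qquad
\mathbf{C}_{xx} \succ 0
\iff \sigma_{1}^{2} > 0 \ \text{and}\ \sigma_{1}^{2}\sigma_{2}^{2} - \sigma_{21}^{2} > 0
\iff d_{1} > 0 \ \text{and}\ d_{2} > 0
```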

Thus the matrices are given by:

are given by:

And the matrix is obtained by the Cholesky decomposition as

is obtained by the Cholesky decomposition as  .

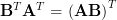

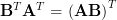
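Many numerical libraries return the Cholesky factor in the form $\mathbf{C} = \mathbf{G}\mathbf{G}^{T}$ instead of $\mathbf{L}\mathbf{D}\mathbf{L}^{T}$. For example, NumPy's `numpy.linalg.cholesky` returns the lower-triangular $\mathbf{G}$. The two forms are related by $\mathbf{G} = \mathbf{L}\mathbf{D}^{1/2}$. The sketch below builds $\mathbf{G}$ from the closed-form $\mathbf{L}$ and $\mathbf{D}$ and checks $\mathbf{G}\mathbf{G}^{T} = \mathbf{C}_{xx}$. It uses pure Python, a hypothetical function name, and the assumed values $\sigma_{1}^{2} = 4$, $\sigma_{21} = 2$, $\sigma_{2}^{2} = 3$.

```python
import math


def cholesky_factor_2x2(s1sq, s21, s2sq):
    """Lower triangular G with C_xx = G G^T, built as G = L D^{1/2}
    from the closed-form L and D (assumes C_xx positive definite)."""
    l21 = s21 / s1sq                     # off-diagonal element of L
    d1, d2 = s1sq, s2sq - s21**2 / s1sq  # diagonal elements of D
    # Column j of L is scaled by sqrt(d_j).
    return [[math.sqrt(d1), 0.0],
            [l21 * math.sqrt(d1), math.sqrt(d2)]]


g = cholesky_factor_2x2(4.0, 2.0, 3.0)
ggt = [[sum(g[i][k] * g[j][k] for k in range(2)) for j in range(2)]
       for i in range(2)]
print(g)
print(ggt)  # should reproduce [[4, 2], [2, 3]] up to round-off
```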

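Finally, considering [1, p. 23, (2.29)], the inverse of $\mathbf{L}$ is given by:

$$\mathbf{L}^{-1} = \left[ \begin{array}{cc} 1 & 0\\ -\frac{\sigma_{21}}{\sigma_{1}^{2}} & 1 \end{array} \right]$$

This is exactly the matrix $\mathbf{L}^{-1}$ of the first part with $\alpha = -\frac{\sigma_{21}}{\sigma_{1}^{2}}$. Consistently, $\mathbf{C}_{yy} = \mathbf{L}^{-1}\mathbf{L}\mathbf{D}\mathbf{L}^{T}(\mathbf{L}^{T})^{-1} = \mathbf{D}$, which matches the diagonal matrix $\mathbf{C}_{yy}$ found above.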
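The inverse of the unit lower triangular factor can be verified by direct multiplication. Only the second row needs attention, since $\frac{\sigma_{21}}{\sigma_{1}^{2}} \cdot 1 + 1 \cdot \left(-\frac{\sigma_{21}}{\sigma_{1}^{2}}\right) = 0$:

```latex
\left[ \begin{array}{cc} 1 & 0\\ \frac{\sigma_{21}}{\sigma_{1}^{2}} & 1 \end{array} \right]
\left[ \begin{array}{cc} 1 & 0\\ -\frac{\sigma_{21}}{\sigma_{1}^{2}} & 1 \end{array} \right]
=
\left[ \begin{array}{cc} 1 & 0\\ \frac{\sigma_{21}}{\sigma_{1}^{2}} - \frac{\sigma_{21}}{\sigma_{1}^{2}} & 1 \end{array} \right]
= \mathbf{I}
```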

[1] Steven M. Kay: “Modern Spectral Estimation – Theory and Applications”, Prentice Hall, ISBN: 0-13-598582-X.

[2] Athanasios Papoulis: “Probability, Random Variables, and Stochastic Processes”, McGraw-Hill.

[3] Panagiotis Chatzichrisafis: “Solution of exercise 2.12 from Kay’s Modern Spectral Estimation – Theory and Applications”.

real random vector

real random vector  is given , which is distributed according to a multivatiate Gaussian PDF with zero mean and covariance matrix:

is given , which is distributed according to a multivatiate Gaussian PDF with zero mean and covariance matrix:

![\mathbf{C}_{xx}=

\left[

\begin{array}{cc}

\sigma_{1}^2 & \sigma_{12} \\

\sigma_{21} & \sigma_{2}^2

\end{array}\right]](https://lysario.de/wp-content/cache/tex_372170732f621d352bb5f0949e11915d.png) | |||

We are asked to find

if

if  where

where  is given by the relation:

is given by the relation:  |  |  | |

|  |  |

so that

and

and  are uncorrelated and hence independent. We are also asked to find the Cholesky decomposition of

are uncorrelated and hence independent. We are also asked to find the Cholesky decomposition of

which expresses

which expresses  as

as  , where

, where  is lower triangular with 1′s on the principal diagonal and

is lower triangular with 1′s on the principal diagonal and  is a diagonal matrix with positive diagonal elements.

is a diagonal matrix with positive diagonal elements.

Solution: First we note that

|  | ![\left[ \begin{array}{cc}y_{1}& y_{2} \end{array}\right]^{T}](https://lysario.de/wp-content/cache/tex_c02d4224fa5cf944c58863ca9bc8a75a.png) | |

| ![\left[ \begin{array}{cc} 1 & 0 \\ \alpha & 1 \end{array} \right] \mathbf{x}](https://lysario.de/wp-content/cache/tex_60fe53a785493828d8c2dea1e900353d.png) |

From the previous relation we find that

![\mathbf{L}^{-1} = \left[ \begin{array}{cc} 1 & 0 \\ \alpha & 1 \end{array} \right]](https://lysario.de/wp-content/cache/tex_ba8da6f3a962f34c66efc4dfe1f0c44f.png) . Furthermore we note that

. Furthermore we note that  , because the mean of

, because the mean of  equals zero:

equals zero:  . The correlation matrix of

. The correlation matrix of  can be determined by:

can be determined by:

|  |  | |

|  | ||

|  | ||

|  | ||

|  | ||

| ![\left[ \begin{array}{cc} 1 & 0 \\ \alpha & 1 \end{array} \right] \left[\begin{array}{cc} \sigma_{1}^2 & \sigma_{12} \\ \sigma_{21} & \sigma_{2}^2 \end{array}\right] \left[ \begin{array}{cc} 1 & \alpha \\ 0 & 1 \end{array} \right]](https://lysario.de/wp-content/cache/tex_0f879e754b539878aa14c425cb12861f.png) | ||

| ![\left[ \begin{array}{cc} 1 & 0 \\ \alpha & 1 \end{array} \right] \left[\begin{array}{cc} \sigma_{1}^2 & \alpha \sigma_{1}^2 +\sigma_{12} \\ \sigma_{21} & \alpha \sigma_{21} + \sigma_{2}^2 \end{array}\right]](https://lysario.de/wp-content/cache/tex_21b592333019be3ac1a38c55b4b89be9.png) | ||

| ![\left[\begin{array}{cc} \sigma_{1}^2 & \alpha \sigma_{1}^2 +\sigma_{12} \\ \alpha \sigma_{1}^{2} + \sigma_{21} & \alpha^2 \sigma_{1}^2 + \alpha ( \sigma_{12} + \sigma_{21}) + \sigma_{2}^2 \end{array}\right]](https://lysario.de/wp-content/cache/tex_aa612e0e83c2883d618768da63587071.png) |

We can determine

in order for

in order for  and

and  to be uncorrelated. The two variables are uncorrelated when the off diagonal elements are zero. Thus for

to be uncorrelated. The two variables are uncorrelated when the off diagonal elements are zero. Thus for  the variables

the variables  are uncorrelated, which is only possible when

are uncorrelated, which is only possible when  . Because the random variables

. Because the random variables  are real the condition

are real the condition  is always fulfilled.

For

is always fulfilled.

For  the matrix

the matrix  can be rewritten as:

can be rewritten as:

|  | ![\left[\begin{array}{cc} \sigma_{1}^2 & 0 \\ 0 & \frac{\sigma_{21}^2}{\sigma_{1}^{4}} \sigma_{1}^{2} + -\frac{ \sigma_{21}}{ \sigma_{1}^2} ( 2 \sigma_{21}) + \sigma_{2}^2 \end{array}\right]](https://lysario.de/wp-content/cache/tex_dad5da696aeb955156ef17afcde0bb34.png) | |

| ![\left[\begin{array}{cc} \sigma_{1}^2 & 0 \\ 0 & \sigma_{2}^2 + \frac{\sigma_{21}^2}{\sigma_{1}^{2}} - 2 \frac{ \sigma_{21}^{2}}{ \sigma_{1}^2} \end{array}\right]](https://lysario.de/wp-content/cache/tex_224886eb794c8c54ccfd8312c720d4ef.png) | ||

| ![\left[\begin{array}{cc} \sigma_{1}^2 & 0 \\ 0 & \sigma_{2}^2 - \frac{ \sigma_{21}^{2}}{ \sigma_{1}^2} \end{array}\right]](https://lysario.de/wp-content/cache/tex_4a8cb460e39e2b0eee684b5954c40718.png) |

We know that Gaussian random variables are independent if they are uncorrelated [2, p. 154-155]. So far we have only found a condition for

in order to render

in order to render  uncorrelated. From [1, p.42] we know that the random variables

uncorrelated. From [1, p.42] we know that the random variables  are also Gaussian as they are obtained by a linear transform from the Gaussian random variables

are also Gaussian as they are obtained by a linear transform from the Gaussian random variables  . Thus we have found the condition for

. Thus we have found the condition for  in order for

in order for  to be uncorrelated and thus independent.

Now let us proceed to find the Cholesky decomposition of

to be uncorrelated and thus independent.

Now let us proceed to find the Cholesky decomposition of  . We first assume that

. We first assume that  are such that

are such that  is positive definite, a condition which is necessary to decompose the correlation matrix

is positive definite, a condition which is necessary to decompose the correlation matrix  by Cholesky’s decomposition:

by Cholesky’s decomposition:

| |||

where the elements of the matrix

, assuming

, assuming  are the elements of the matrix

are the elements of the matrix  , are given by [1, p.30, (2.55)] (see also [3, relations (10) and (11) ]):

, are given by [1, p.30, (2.55)] (see also [3, relations (10) and (11) ]):

| |||

and the elements of the matrix

are given by the relation:

are given by the relation:

| |||

The corresponding elements of the matrices

and

and  are thus given by:

are thus given by:

|  |  | |

|  |  | |

|  |  | |

|  |  | |

|  | ||

|  | ||

|  | ||

|  |  | |

|  | ||

|  | ||

| |||

|  |  | |

|  | ||

|  |

Thus the matrices

are given by:

are given by:

|  | ![\left[

\begin{array}{cc}

1 & 0\\

\frac{\sigma_{21}}{\sigma_{1}^{2}} & 1

\end{array}

\right]](https://lysario.de/wp-content/cache/tex_ce262427f98ba182a6ab51cd9dae2d8c.png) | |

|  | ![\left[

\begin{array}{cc}

\sigma_{1}^{2} & 0\\

0 & \sigma_{2}^{2} -\frac{\sigma_{21}^{2}}{\sigma_{1}^{2}}

\end{array}

\right]](https://lysario.de/wp-content/cache/tex_5ea8a46fb2e8d1d2fd8ea75edcd9d510.png) |

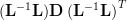

And the matrix

is obtained by the Cholesky decomposition as

is obtained by the Cholesky decomposition as  .

While, considering [1, p. 23, (2.29)] that for two matrices

.

While, considering [1, p. 23, (2.29)] that for two matrices  , the matrix

, the matrix  is given by:

is given by:

|  |  | |

|  | ||

|  | ||

|  |

[1] Steven M. Kay: “Modern Spectral Estimation – Theory and Applications”, Prentice Hall, ISBN: 0-13-598582-X.

[2] Papoulis, Athanasios: “Probability, Random Variables, and Stochastic Processes”, McGraw-Hill.

[3] Chatzichrisafis: “Solution of exercise 2.12 from Kay’s Modern Spectral Estimation - Theory and Applications”.

Leave a reply